At the heart of modern computing is an idea inspired by nature: the artificial neural network. These mathematical models are essential AI building blocks. They help machines process information in ways that can feel almost human.

They are loosely modelled on the brain, which makes it possible to build systems that learn from data. This is the basis of advanced artificial intelligence systems. These systems change how we use technology.

These models are very good at recognising things and making choices. They have layers of nodes, similar to biological neural networks.

In many fields, these systems lead to new ideas through learning. They mark a big change in how machines learn and decide on their own.

What is a Neural Network in Computer Science

Neural networks are a key innovation in computer science. They allow systems to learn and adapt over time. This makes them a core part of artificial intelligence, helping machines understand patterns and solve problems.

Basic Definition and Purpose

A neural network is made up of nodes called artificial neurons. These nodes are connected in layers. This setup lets the system learn from data and get better with time.

The main goal of neural networks is to handle complex tasks that are hard to solve with hand-written rules. They’re great at tasks like recognising images and understanding speech. This is because they can find patterns in big datasets.

A typical neural network architecture has an input layer, one or more hidden layers, and an output layer. Each connection between neurons has a weight that is adjusted during training. This helps the network get better at making predictions.

Historical Development

The history of neural networks is long and exciting. It shows how ideas have turned into real AI systems. This journey has been driven by better computers and a deeper understanding of math.

Early Theoretical Foundations

In 1943, Warren McCulloch and Walter Pitts wrote a key paper. They showed how artificial neurons could do logical tasks. This laid the groundwork for future work.

This early work inspired others to study how artificial networks could work like the brain. Even with the very limited computing hardware of the time, these pioneers set out important principles for later work on simulating biological neural networks.

Key Milestones in Evolution

In 1958, Frank Rosenblatt created the perceptron. This was a single-layer neural network that could learn to classify patterns. It was a big hit with researchers and the public.

The 1980s saw a big leap with backpropagation algorithms. These algorithms helped train multi-layer networks. They made it possible for these systems to learn complex things.

Recently, deep learning has made huge strides. These networks, with many hidden layers, have done amazing things in areas like computer vision and natural language processing.

| Year | Development | Key Contributors | Significance |
|---|---|---|---|
| 1943 | First mathematical model of artificial neurons | McCulloch and Pitts | Established theoretical foundation |
| 1958 | Perceptron invention | Frank Rosenblatt | First trainable neural network |
| 1986 | Backpropagation popularisation | Rumelhart, Hinton, Williams | Enabled efficient multi-layer training |
| 2012 | Deep learning breakthrough | Krizhevsky et al. | Demonstrated superior image recognition |

These milestones show how ideas turned into real systems. Each step built on the last, making neural network architecture more advanced today.

The history of neural networks is a mix of biology and math. It shows how combining these fields has led to powerful AI tools. This mix continues to push AI research and applications forward.

Biological Basis of Neural Networks

The design of artificial neural networks is inspired by the human brain. This natural system is the loose blueprint for today’s AI systems.

Inspiration from Human Neurons

Biological neurons are the inspiration behind artificial neural networks. These cells pass information using electrical and chemical signals. Billions of them working together make the human nervous system highly complex.

Each biological neuron has three key parts:

- Dendrites that get signals

- Cell body that processes these signals

- Axon that sends signals to other neurons

This design inspired the way artificial neurons work. It shows how simple parts can make complex systems.

Simulating Neural Processes

Artificial neural networks imitate, in simplified form, how biological neurons work. They use mathematical models to do this. The result is a digital system that can learn and change.

The machine learning process in artificial networks is like how we learn. Both strengthen some connections and weaken others. This helps them get better with time.

Biological and artificial systems share some key traits:

- They send signals through connected parts

- Connections have different weights

- They adapt to patterns

- They get better with practice

There are big differences too. Biological neurons are far more complex and efficient. Artificial systems use much simpler math to approximate their behaviour.

This natural inspiration keeps pushing AI forward. Scientists are always looking to biology for new ideas.

Core Components and Architecture

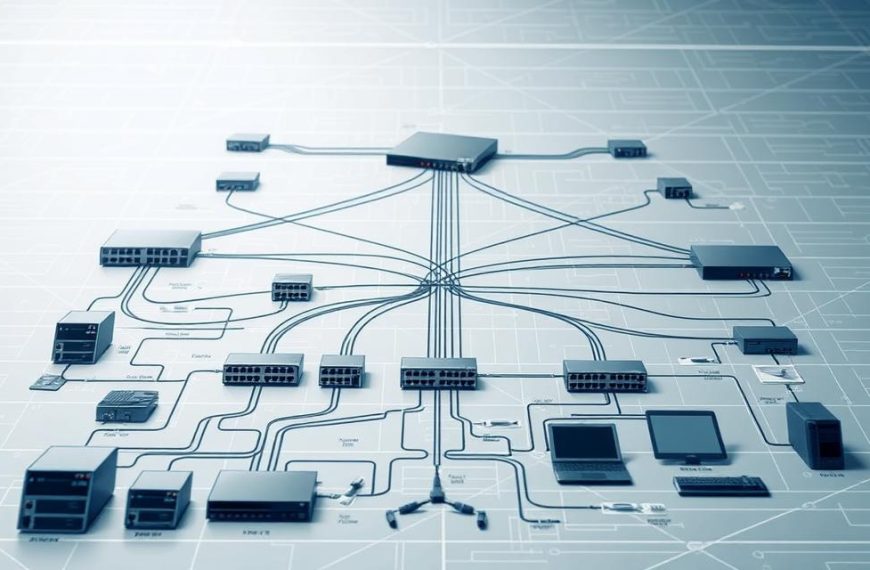

Neural networks have key parts that work together. They help machines learn and understand complex patterns. This structure is at the heart of today’s artificial intelligence.

Neurons, Layers, and Connections

Artificial neurons are organised into layers in neural networks. The input layer gets raw data. Hidden layers do complex calculations. The output layer gives the final results.

Each neuron is connected to others through pathways with weights. These weights decide how information moves through the network. This setup lets the network transform input data into useful outputs.
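The flow from input layer to output layer can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the layer sizes, the weight and bias values, and the helper names `dense_layer`, `relu`, and `forward` are all made up for the example, and ReLU is used as one common choice of activation for the hidden layer.

```python
def dense_layer(inputs, weights, biases):
    """Compute each neuron's weighted sum of the inputs plus its bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


def relu(values):
    """Replace negative values with zero (a common hidden-layer activation)."""
    return [max(0.0, v) for v in values]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Pass data through one hidden layer and one output layer."""
    hidden = relu(dense_layer(inputs, hidden_w, hidden_b))
    return dense_layer(hidden, output_w, output_b)


# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_w = [[0.5, -1.0], [1.0, 1.0]]  # one row of weights per hidden neuron
hidden_b = [0.0, -0.5]
output_w = [[1.0, 2.0]]
output_b = [0.1]

prediction = forward([1.0, 0.5], hidden_w, hidden_b, output_w, output_b)
print(prediction)
```

Each row of a weight matrix belongs to one neuron, so changing a single number changes how strongly one input influences that neuron.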

Simple networks have one or a few hidden layers. Deep learning networks have many. This depth helps them learn increasingly abstract features from data. The layered structure loosely resembles how our brains process information in stages.

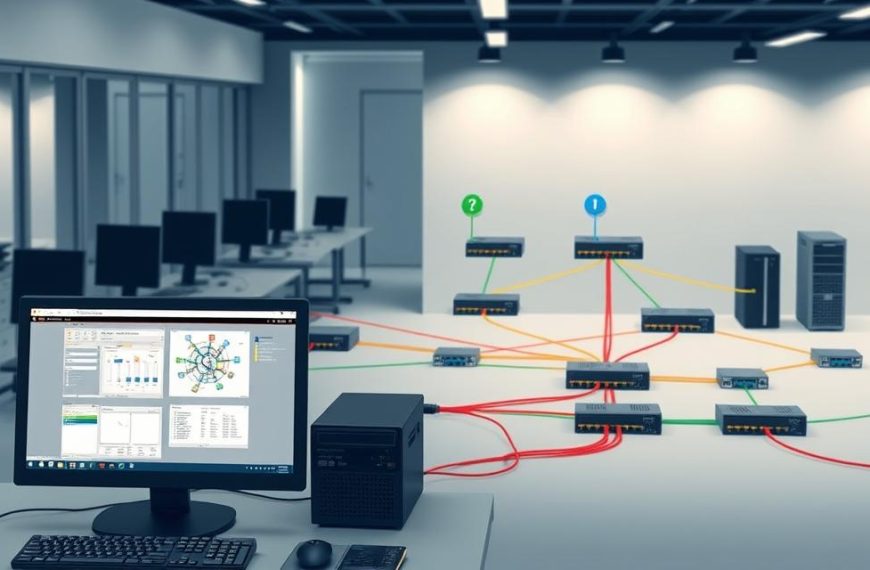

Weights, Biases, and Learning Mechanisms

Weights show how strong the connections are between neurons. They affect how much one neuron influences another. Networks adjust these weights to get better.

A bias is a constant added to a neuron’s weighted sum. It lets the neuron activate even when every input is zero, which helps the model fit the data better. Together, weights and biases are the parameters the network learns.
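A tiny example may make the role of the bias clearer. The sketch below assumes a single neuron with a simple step activation (it fires when the total is above zero); the function name `neuron_output` and all numbers are hypothetical.

```python
def neuron_output(inputs, weights, bias):
    """Weighted sum of inputs plus bias, followed by a step activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


weights = [0.8, -0.4]       # illustrative connection strengths
zero_inputs = [0.0, 0.0]    # every input is zero

# With no bias the weighted sum is 0, so the neuron stays off.
without_bias = neuron_output(zero_inputs, weights, bias=0.0)

# A positive bias shifts the total above the threshold, so the neuron fires.
with_bias = neuron_output(zero_inputs, weights, bias=0.5)

print(without_bias, with_bias)
```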

Learning happens through backpropagation and gradient descent. Backpropagation computes how much each weight and bias contributes to the error, working backwards from the output layer. Gradient descent then nudges each parameter a small step in the direction that reduces the error.
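As a rough illustration, here is gradient descent on the smallest possible model: one linear neuron with one weight and one bias. With a single neuron, backpropagation reduces to one application of the chain rule, so this is a simplified sketch rather than a full multi-layer implementation. The toy data, the learning rate, and the number of steps are assumptions chosen for the example.

```python
# Toy data generated from y = 2x + 1 (an assumption for illustration).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0   # start with no knowledge
learning_rate = 0.05      # size of each adjustment step


def mean_squared_error(w, b):
    """Average squared difference between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


initial_error = mean_squared_error(weight, bias)

for _ in range(2000):
    grad_w = 0.0
    grad_b = 0.0
    for x, y in data:
        error = weight * x + bias - y
        # Chain rule: d(error^2)/dw = 2 * error * x, d(error^2)/db = 2 * error
        grad_w += 2 * error * x / len(data)
        grad_b += 2 * error / len(data)
    # Gradient descent: move each parameter against its gradient.
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

final_error = mean_squared_error(weight, bias)
print(weight, bias, initial_error, final_error)
```

In this sketch the weight and bias move towards the values used to generate the data, and the error shrinks as they do. Real networks apply the same idea to many parameters at once.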

These steps help deep learning networks get better over time. They keep improving until they do well on the training data.

Activation Functions and Their Roles

Activation functions determine how strongly a neuron responds to its inputs. They add non-linearity, allowing for complex pattern recognition. Without them, a network could only represent linear relationships, no matter how many layers it had.
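To see why non-linearity matters, the sketch below stacks two linear layers with no activation function between them and compares the result with a single linear layer. It uses one input and one neuron per layer to keep the arithmetic visible; the function names and parameter values are hypothetical.

```python
def two_linear_layers(x, w1, b1, w2, b2):
    """Two layers applied in sequence with no activation in between."""
    hidden = w1 * x + b1
    return w2 * hidden + b2


def one_linear_layer(x, w, b):
    """A single linear layer."""
    return w * x + b


w1, b1, w2, b2 = 3.0, 1.0, 0.5, -2.0

# Expanding w2 * (w1 * x + b1) + b2 gives (w2 * w1) * x + (w2 * b1 + b2).
combined_w = w2 * w1
combined_b = w2 * b1 + b2

test_inputs = [-2.0, 0.0, 4.0]
stacked = [two_linear_layers(x, w1, b1, w2, b2) for x in test_inputs]
collapsed = [one_linear_layer(x, combined_w, combined_b) for x in test_inputs]

print(stacked)
print(collapsed)
```

Both lists hold the same values, which suggests that extra layers add nothing unless a non-linear function sits between them.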

Different functions have different roles. ReLU is popular for hidden layers in deep learning. It outputs zero for negative inputs and the input value for positive inputs.

Other functions like sigmoid and hyperbolic tangent have their uses. The choice of function affects how fast the network learns and its final performance.

| Activation Function | Mathematical Formula | Primary Advantages | Common Applications |
|---|---|---|---|
| ReLU (Rectified Linear Unit) | f(x) = max(0, x) | Computationally efficient, mitigates vanishing gradients | Hidden layers in deep networks |
| Sigmoid | f(x) = 1 / (1 + e^{-x}) | Outputs between 0 and 1, smooth gradient | Binary classification output layers |
| Tanh (Hyperbolic Tangent) | f(x) = (e^{x} - e^{-x}) / (e^{x} + e^{-x}) | Outputs between -1 and 1, zero-centered | Hidden layers in RNNs |
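The three formulas in the table translate directly into code. This is a minimal sketch using only Python’s standard `math` module; the sample inputs are arbitrary.

```python
import math


def relu(x):
    """f(x) = max(0, x)"""
    return max(0.0, x)


def sigmoid(x):
    """f(x) = 1 / (1 + e^{-x})"""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """f(x) = (e^{x} - e^{-x}) / (e^{x} + e^{-x})"""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))


# Compare the three functions on a negative, a zero, and a positive input.
for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.3f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}")
```

The hand-written `tanh` follows the table for clarity, but it can overflow for very large inputs. In practice the built-in `math.tanh` is the safer choice.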

These parts work together to make deep learning networks effective. The design of the architecture affects what patterns the network can learn. Understanding these elements helps us see how artificial intelligence makes decisions and predictions.

Types and Applications of Neural Networks

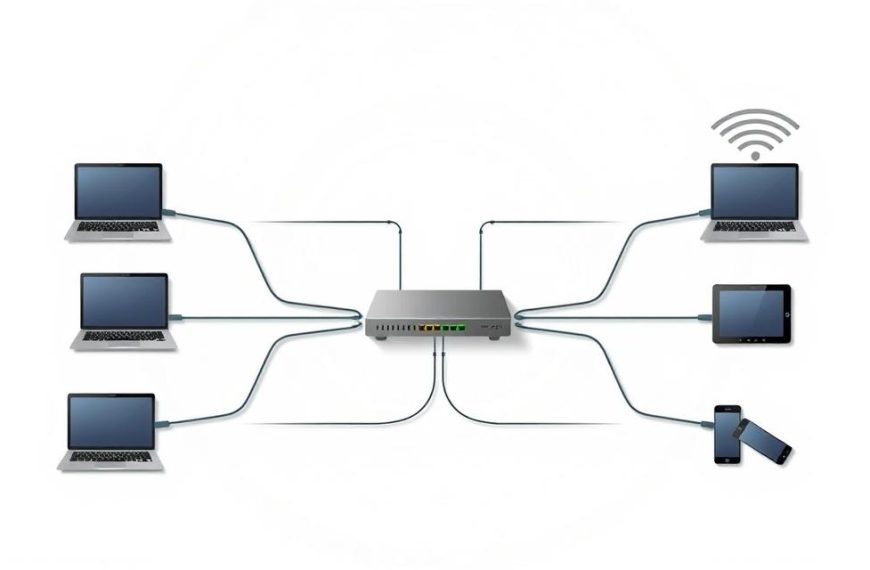

Neural networks have grown into many types, each solving different problems. They show how artificial intelligence can tackle real-world issues in many fields.

Feedforward and Convolutional Neural Networks

Feedforward neural networks are the simplest type: data flows in one direction, from input to output, with no loops. They’re great at spotting patterns and classifying things. This makes them key in computer science applications.

Convolutional neural networks (CNNs) changed how we process images. They slide small learned filters across an image to find edges and shapes. This helps them do better in visual tasks.
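The idea of a filter finding an edge can be shown on a tiny image. In this sketch the filter is written by hand, whereas a real CNN learns its filter values during training. The helper name `apply_filter`, the image, and the filter are all assumptions for illustration. Like most deep learning libraries, it slides the filter without flipping it, which is technically cross-correlation.

```python
def apply_filter(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# A 3x4 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-written filter that responds where brightness rises from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = apply_filter(image, vertical_edge)
print(feature_map)
```

The feature map is non-zero only in the column where dark meets bright, so the filter acts as a simple vertical edge detector.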

CNNs have made medical imaging better. They help doctors find things in X-rays and MRI scans that are hard to see. Their accuracy is a big help in healthcare.

Recurrent and Specialised Neural Networks

Recurrent neural networks (RNNs) can handle data that comes in a sequence. They keep a hidden state that carries information from earlier steps to later ones. This makes them good for analysing time series and understanding language.
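The memory of an RNN can be sketched with a single recurrent neuron. At every step it combines the current input with its own previous output, usually called the hidden state. The weights below are fixed, hypothetical values rather than trained ones, and `rnn_step` and `run_rnn` are illustrative names.

```python
import math


def rnn_step(x, hidden, w_input, w_hidden, bias):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)


def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Process a sequence one element at a time, carrying the hidden state."""
    hidden = 0.0
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states


# The two sequences differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)
print(states_b)
```

Both sequences end with the same inputs, yet the final hidden states differ. The first sequence’s early input still echoes through the hidden state, although it fades with each step.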

Long Short-Term Memory (LSTM) networks are a type of RNN. They use gates to control what is kept and what is forgotten, so they retain information over longer sequences. LSTMs are widely used for speech and text prediction.

New types of neural networks keep coming up. Each one is good for different computer science applications. For example, generative adversarial networks generate realistic synthetic data, and transformer networks are behind modern language models.

Real-World Uses in AI Systems

Neural networks are changing many industries. Banks use them to spot fraud and make trades. They’re very good at finding suspicious activity.

Self-driving cars use neural networks to navigate and avoid obstacles. They look at sensor data to make decisions. Modern cars are getting safer thanks to these systems.

Streaming services and online shops use neural networks to suggest things. They look at what you watch and buy to recommend more. This shows how commercially valuable neural networks can be.

Virtual assistants and translation tools use neural networks to understand and create human language. These tools are getting better all the time.

Neural networks come in many types, solving complex problems. They’re used in healthcare and finance, showing AI’s growing power. As neural networks get better, we’ll see even more computer science applications in the future.

Conclusion

Neural networks are key to today’s artificial intelligence. They’ve changed many fields, from health to self-driving cars. Their journey shows how much they’ve impacted computer science.

Training neural networks well lets them do amazing things. They get better at spotting patterns and making choices. This growth from simple to complex learning is exciting.

As we face new challenges, we need to keep improving neural networks. They must learn in new ways to tackle harder tasks. The future looks bright for AI, with neural networks leading the way.